Academics: You're Doing Open Source Wrong

Cake with melted plastic lego pieces, delicious. Generated by Midjourney

I recently started a PhD in Computer Science after spending the past 10 years working as a software engineer. One of the biggest shocks to me in this transition (aside from how incompetent I am as a researcher) has been the appalling state of code that accompanies published research papers. Usually when I complain about academic code, people think I’m just talking about code quality being poor (which it is), but it’s much deeper than that. The way code is open-sourced in most academic papers is typically completely broken, and shows a deep misunderstanding about what code is for, and what open-source is about.

Imagine you invite some friends to your apartment, and one of them brings a cake they baked. When you try to eat the cake, you find that it has melted plastic lego pieces in it. Shocked, you point this out to your friend, who just replies, “Oh, I’m not good at cooking.” You then realize your friend has a misunderstanding at a fundamental level about what cooking is for, and what food even is. The code that accompanies research papers is like that cake - it fails at the most basic thing code is meant to do, which is to run and be usable.

In this post, we’ll go over what I view as the problem, and share tips for academics on how to do a better job of open-sourcing their code. I’ll be focusing on Python, as that’s mainly what’s used in AI research, but a lot of this will apply to other languages as well. First and foremost, we’ll focus on the big picture of making the code fit for human consumption, and then we’ll go over how to improve the taste, aka code quality.

The current state of academic code is both a travesty and a huge missed opportunity. The few cases I’ve seen where research code is properly packaged and is made easy to use, both the repo and corresponding paper get massive numbers of citations and wide usage. In this article, I hope to show that doing a good job of open-sourcing research code is worth the effort and that it’s not difficult.

The problematic academic mindset

Most code I encounter that’s released as part of academic papers is completely broken, as in it’s not possible to run the code as provided at all. This is typically due to things like missing files the researcher forgot to upload, hardcoded file paths to stuff on the researcher’s own machine, missing documentation, or not pinning Python dependency versions. This shows that the researcher never even tried running the code they open-sourced, and instead just copied and pasted some files from their local hard drive into a Github repo, linked the repo in their paper, and high-fived everyone for a job well done. There is no notion that other people are going to want to actually try to run that code, and that by uploading broken code and advertising it in a paper you are directly wasting thousands of hours of other people’s time.

Academics are not bad people, and I don’t believe they’re intentionally being malicious. Instead, I think the mindset of most researchers towards open-source code is the following:

- Putting Python files in a Github repo is just a way to make a paper seem more legit, since if there’s code then people will believe the results.

- Code is just for looking at to get an idea of how something is implemented, not for running. It’s like an extended appendix to the paper.

The problem with the academic mindset to open-sourcing code above is that it misses the core thing that code is for, which is for actually running and accomplishing a task.

You want other people to use your code in their work

As a researcher, success means having your work widely cited and used by other researchers. One of the most direct ways to accomplish that is for other researchers to use your code in their work. If you make your code easy to use and package it properly, other researchers will use it and then cite your papers. If your code is completely broken or not usable due to not being packaged properly, nobody will use it. Other researchers want to use your code too - it’s a win-win for everyone if research code is open-sourced properly.

DO NOT PUBLISH BROKEN CODE!!!

It should go without saying, but it’s not OK to publish code that’s completely broken. Your paper is a giant advertisement for your code, and people who read your paper will naturally go to the repo you link and try running what they find there. If the code is broken, you are collectively wasting thousands of hours of other people’s time. Typically, when research code is broken, I find it’s for one of the following reasons:

- The researcher never actually tested what they published: It seems like in a lot of cases, researchers simply copy/paste files from their local hard disk into a git repo, and never bother to actually check if what they uploaded is complete and can run following the instructions they give. There are often missing files, or references to hardcoded file paths on the researcher’s own hard disk. Avoiding broken code like this is a simple question of actually testing what you publish. This is a bare minimum which would only take a few minutes, but will save everyone else who reads your paper a lot of pain.

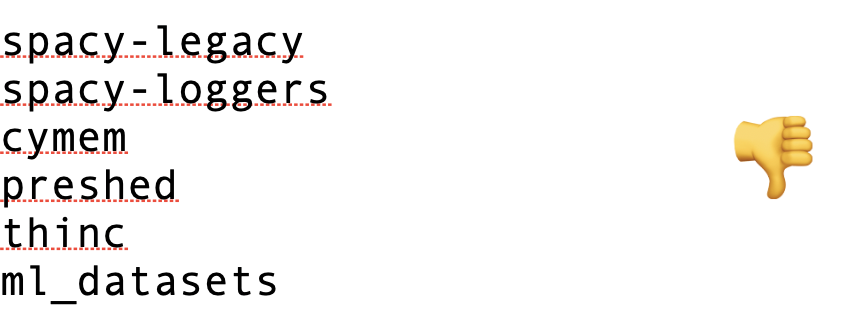

- Dependency versions are not pinned: If you rely on other Python libraries and don’t pin a version range, your code is guaranteed to break in 6 months when one of those dependencies makes a breaking change. Don’t do this. It’s incredibly frustrating needing to go on an archeological dig through PyPI trying to guess what version of each dependency the researcher was probably using.
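As a minimal sketch of one way to avoid the hardcoded-path problem described above: let the caller pass the data location, fall back to an environment variable, and only then use a relative default. The function name resolve_data_dir and the variable name MYPROJECT_DATA_DIR are hypothetical, chosen purely for illustration.

```python
import os
from pathlib import Path
from typing import Optional

# Hypothetical environment variable name, used only for this illustration.
DATA_DIR_ENV_VAR = "MYPROJECT_DATA_DIR"


def resolve_data_dir(data_dir: Optional[str] = None) -> Path:
    """Pick the data directory from an argument, then an env var, then a default."""
    if data_dir is not None:
        # An explicit argument always wins.
        return Path(data_dir).expanduser()
    from_env = os.environ.get(DATA_DIR_ENV_VAR)
    if from_env:
        # Lets users point the code at their own data without editing source files.
        return Path(from_env).expanduser()
    # Default: a "data" folder under the current working directory,
    # rather than an absolute path that only exists on the author's machine.
    return Path.cwd() / "data"
```

With something like this in place, nothing in the repo needs to mention a path that only exists on one person's laptop.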

Release a library, not a collection of files

After ensuring that the code is actually working, the next most important thing is packaging it properly so others can use it in their work. Ideally, your goal should be to release a library which does the thing in your paper, not a pile of random Python files.

Python libraries should be packaged and released on PyPI

If your code is just a bunch of Python scripts in a Github repo, it’s nearly impossible for other people to use that code in their work. What are they supposed to do, copy and paste files from your repo onto their hard drive? Are they supposed to open up the files and copy/paste individual chunks of Python code out? Nobody is going to do that. Fortunately, there’s a well-established way to import code into a Python project which makes it easy for your code to be used by others, and that’s for the code to be packaged as a library on PyPI. This lets it be installed with pip install <your-library-name>.

The idea of releasing a library might sound daunting, but it’s really easy once you get used to it. The difference is a code organization question more than anything else, and some basic thought put to “what would someone want to do with this code?”. Once you’ve learned to package code into a library you’ll see that doing a decent job of packaging your code is far easier than learning LaTeX, or writing a paper, or finding a research idea to begin with. We’ll discuss how to make this easy in the section on Poetry later in the article.

A Good Repo™

The git repo for your code should have the following components:

- A basic README explaining how to use the library, which includes the following:

  - An Installation section, which says:

        pip install your-awesome-library

  - Basic usage instructions, like:

        from your_awesome_library import do_awesome_thing
        result = do_awesome_thing(input)

- The library should be usable in Python, NOT (only) via running a Python script

- It needs to work

… and that’s basically it. If you do this, your code should be easy for others to use and you’re already better than 95% of the open-source code released by researchers.
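To illustrate the "usable in Python, not only via a script" point, here is a rough sketch of one common layout: the real work lives in an importable function, and the command-line entry point is a thin wrapper around it. The body of do_awesome_thing is a placeholder (it just upper-cases text), and main is an assumed name, not something prescribed by any tool.

```python
import argparse
from typing import List, Optional


def do_awesome_thing(text: str) -> str:
    """Hypothetical library entry point: importable and callable from Python."""
    # Placeholder logic standing in for the method from your paper.
    return text.upper()


def main(argv: Optional[List[str]] = None) -> int:
    """Thin command-line wrapper that only parses arguments and calls the library."""
    parser = argparse.ArgumentParser(description="Run do_awesome_thing on some text.")
    parser.add_argument("text", help="input text to process")
    args = parser.parse_args(argv)
    print(do_awesome_thing(args.text))
    return 0


# In a real script file you would typically finish with:
#     if __name__ == "__main__":
#         raise SystemExit(main())
```

Because the script does nothing except call the function, anyone who wants the behavior inside their own code can import do_awesome_thing directly instead of shelling out to a script.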

What about reproducing the results in my paper? Shouldn’t that be the main point of open-sourced code?

By all means, do include code to reproduce the experiments in your paper, as reproducibility of results is important. However, recognize that the majority of users of your code won’t want to reproduce your results, so it shouldn’t be the main focus. It’s fine to include an experiments folder in your git repo for reproducing your results that’s not published to PyPI, or even split apart the experiments into a separate git repo from the reusable library code so the library can evolve separately. If you take the approach of splitting the repos, then the experiments repo can import the library as a normal pip dependency, which also has the bonus of verifying that your library works when installed as a dependency in other projects. This leads naturally to the next point:

It’s OK to publish multiple repos per paper

If your paper has several distinct components, each of which could be used independently as its own library, there’s nothing wrong with releasing multiple open-source repos or libraries along with your paper. Your goal should be to make your code useful to others, and you might find that it’s more natural to release 2 or even 3 different libraries so the various parts of your paper can be used independently rather than trying to fit everything into 1 library. There’s no rule that says every paper must correspond to 1 and only 1 git repo. If splitting into separate libraries makes it easier for others to use, go for it!

What are some examples of this done well?

The best examples of researchers releasing their code are also some of the best known projects in the field. I don’t believe this is a coincidence - if you package your code properly and release it on PyPI, then others will use it in their own projects and cite your paper. Two excellent examples that come to mind are the following:

FlashAttention

FlashAttention is a beautiful illustration of how you don’t need to overthink this to do a good job. This repo has a simple README with installation of the library via pip install flash-attn and basic instructions on how to use it in Python. There’s a benchmarks folder in the repo to reproduce the results in the paper, but it’s not the main focus of the library. The library itself is simple and focused. A+

Sentence Transformers

Sentence Transformers goes above and beyond: it includes a documentation website, and it continues to evolve and add pretrained models to the library. This library corresponds to an original paper by the author on a technique for sentence similarity, but was just packaged well and focused on ease of use, and the author clearly has put a lot of care into this library.

Both of these libraries were created by individual researchers along with their papers, by a PhD student in the case of FlashAttention, and a postdoc in the case of Sentence Transformers. In both these cases, the authors could have just copy/pasted a collection of unusable Python scripts into a Github repo and left it at that, but then likely neither of their papers would have achieved anywhere near the level of success they both have seen. I believe the level of polish that these libraries show is very achievable for all academics, and should be the norm rather than the exception.

Poetry: packaging and dependency management made easy

Personally, I like using Poetry for managing Python projects. Poetry handles a lot of the complexity of virtual environments for Python, dependency management, and finally, publishing your library to PyPI so it can be installed with pip install <your-library>. Poetry isn’t the only way to do this, but it provides a good foundation.

Let’s assume we’re the authors of a paper about dog image classification using a technique called “DogBert”. We could start by making a new Poetry project:

poetry new dogbert

This will give us the following file structure:

    dogbert
    ├── README.md
    ├── dogbert
    │   └── __init__.py
    ├── pyproject.toml
    └── tests
        └── __init__.py

It may seem confusing that there’s 2 nested folders, both named dogbert, but this is a standard setup for Python projects. The inner dogbert folder containing __init__.py is where all our library Python files will go. If you write tests (and you should!), those go in the tests folder.

We cd into the outer dogbert folder, and run poetry install to initialize a new virtual environment for our project, and make sure any needed dependencies are installed.

We can add any pip dependencies our project needs with poetry add <dependency>. Finally, when we want to publish our library on PyPI so it can be installed with pip install dogbert, we just run the following 2 commands:

poetry build

poetry publish

And that’s it, our library is on PyPI! There’s really not much to it, that’s all it takes to package and publish a library to PyPI.

Imports and scripts in Poetry

If you’re used to just writing standalone Python scripts in a single file and running them with python my_file.py, Poetry might seem strange at first. If we have the file utils.py at dogbert/utils.py with a function called preprocess(), and we have another file which wants to import that preprocess function, we can import it like below:

from dogbert.utils import preprocess

Poetry creates its own virtual environment for each project so different projects have independent sets of installed Python modules. This is great, but it means that instead of directly running python, you need to prefix all commands on the CLI with poetry run so the correct environment is used. Also, for scripts, it’s best to run them using Python’s module flag. Where you may be used to directly running a script file with python path/to/script.py, when using Poetry you’d instead run poetry run python -m path.to.script. If we had a script called train.py at dogbert/scripts/train.py, we could run that with poetry run python -m dogbert.scripts.train.

This may take some getting used to initially, but it’s a minor workflow change which quickly becomes second nature.

Aside: Poetry with PyTorch

I’ve had issues in the past with adding PyTorch as a dependency from Poetry since PyTorch has multiple versions with different CUDA requirements, which Poetry doesn’t handle well. I find it’s best to simply leave it to the end-user of your library to install PyTorch, and not try to force it via poetry add torch, since it’s easy to end up with a non-CUDA version of PyTorch that way. Oftentimes, if you’re relying on libraries like pytorch-lightning or other popular machine learning libraries, they’ll already handle making sure PyTorch is installed. If you want to include torch as a dependency, I’d recommend adding it as a dev dependency with poetry add --group dev torch so you won’t accidentally end up with a CPU-only version of PyTorch being installed for end-users of your library. Hopefully this will be handled better in future versions of Poetry/PyTorch!

Bonus points: Make the most common use-case easy

Whatever users of your code are most likely to want to do should “just work” out of the box if possible. For instance, in the case of our DogBert image classification paper example, the most likely thing a user would want to do with our code is to classify images with our pretrained model. We should make this use-case as painless as possible. For instance, we can upload our pretrained model to the Huggingface Model Hub and then have our code automatically download and use that pretrained model if the user doesn’t specify a different model to use. If you want to upload your pretrained model somewhere else, that’s fine, just make sure your library can auto-download it by default so your code can “just work”.

from dogbert import DogbertModel

# default case, auto-download our pretrained model from Huggingface

model = DogbertModel()

# allow the user to specify their own model if they want

model = DogbertModel("/path/to/model")
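For a sense of what could sit behind that interface, here is a minimal sketch. Everything in it is an assumption made for illustration: the repo id your-username/dogbert-base is made up, and download_from_hub is a placeholder you would replace with a real call to wherever your weights are hosted. The downloader is passed in as a parameter so the default-vs-custom logic can be tested without touching the network.

```python
from pathlib import Path
from typing import Callable, Optional

# Hypothetical identifier for the pretrained weights; replace with your own.
DEFAULT_MODEL_ID = "your-username/dogbert-base"


def download_from_hub(model_id: str) -> Path:
    """Placeholder: a real library would download and cache the weights here."""
    raise NotImplementedError("wire this up to your model host of choice")


class DogbertModel:
    """Sketch of a model wrapper that 'just works' with no arguments."""

    def __init__(
        self,
        model_path: Optional[str] = None,
        downloader: Callable[[str], Path] = download_from_hub,
    ) -> None:
        if model_path is None:
            # Default case: fetch the pretrained model automatically.
            self.weights_path = downloader(DEFAULT_MODEL_ID)
        else:
            # The user supplied their own model, so no download is attempted.
            self.weights_path = Path(model_path)
```

The important design choice is only that the no-argument constructor does something useful; how the weights are actually fetched and loaded is up to you.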

Use a linter

Nothing makes me doubt the results of a paper more than opening up the code the paper links to and seeing unused variables and linting errors strewn throughout the files. Linting errors like this are the coding equivalent of submitting a paper to a journal written in crayon. Fortunately, this is easy to remedy by just using a linter during development.

Linters like Flake8 or Pylint can check your code for common code-quality issues like unused variables and report them as errors. All popular code editors have plugins for Python linters which will highlight linting errors directly in your code. You can also customize the errors the linters report if there are types of errors you want to ignore. Linting errors often correspond to real bugs in your code, and are an easy way to improve your code quality at almost no cost. There’s really no downside to using a linter.

Related to linters are code formatters like Black. Black will automatically format your code for you so the formatting is consistent, and takes away the need for you to think about formatting entirely. Personally, I think code formatters are great and recommend using Black, but this isn’t a universal opinion in the Python world. I’d recommend experimenting with this and see if you like it. There are plugins for all code editors which will let you automatically run Black whenever you save a Python file, which makes it really seamless.

Type hints

Type hinting is a relatively new addition to the Python world, but is something I’m a big fan of. Type hints take some getting used to initially, but the payoff is worth it. Adding type hints to your code allows the editor to auto-suggest variable and method names to you, and automatically tell you if you mistyped some parameter name somewhere rather than crashing at runtime. Furthermore, users of your code will benefit from your type hints since then your library functions and parameter names will autocomplete in their editor too! If you use type hints, you also need to use a type checker to make sure the types are correct, as Python will not do this for you. The two most popular are MyPy and PyRight. These work like linters, and can easily be added to your editor to automatically report type errors as you code.

I also recommend moving away from using Python dictionaries to pass structured data around and instead using dataclasses. Dataclasses allow you to specify exactly what fields some data should contain, and will ensure those fields exist. This fits in nicely with type hinting since MyPy and other type checkers can verify you’re using the dataclasses correctly, and you never have to worry about accidentally mistyping a key of some python dict ever again.
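As a small illustration of both ideas together, here is a sketch of a type-hinted function that passes results around as a dataclass instead of a dict. The Prediction class and best_prediction function are hypothetical names invented for this example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Prediction:
    """Hypothetical structured result, replacing a dict like {"label": ..., "score": ...}."""

    label: str
    score: float


def best_prediction(predictions: List[Prediction]) -> Prediction:
    """Return the prediction with the highest score."""
    return max(predictions, key=lambda p: p.score)


predictions = [
    Prediction(label="corgi", score=0.91),
    Prediction(label="pug", score=0.07),
]
top = best_prediction(predictions)
# A typo such as top.lable would be flagged by a type checker before the code runs.
print(top.label)
```

With a plain dict, a mistyped key like result["lable"] only fails when that line happens to execute; with the dataclass, the editor and the type checker can both point at the mistake up front.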

Writing tests

Testing is something that I didn’t understand the value of until I started working professionally. There’s a natural aversion to writing tests as it feels like a bunch of extra work you need to do, and everyone always feels like they don’t have time for that. However, as I’ve improved as a software engineer and gotten more comfortable with writing tests, I find the exact opposite: I don’t have time not to write tests.

If you don’t add test cases as you code, you’re probably testing manually. However, this manual testing means that anytime you want to make a change to your existing code, either to refactor or to add new features, you’re always terrified you might accidentally break something you already wrote. Then you need to either go back and manually test everything again, likely forgetting something, or you just hack in your change in whatever way is the least likely to break something, resulting in hacks on top of hacks. This “fear-driven development” leads to horrible messes of code that are almost certainly broken and I believe leads to a lot of the code quality issues endemic throughout academic codebases.

Testing doesn’t have to be difficult. If you can test some piece of code in a Pytest test case rather than manually, do it. Some further tips for testing practically:

- Don’t be afraid to make your source code uglier if it helps with testing. If splitting out the core functionality of a function into a separate function is easier to test, do it.

- Don’t worry about “unit” vs “integration” tests - just test however is easiest for you. Feel free to mix hyper-focused tests on small functions with tests that run through your whole model. The important thing is to have tests, no matter what kind.

- If there’s stochastic outputs from a function, it’s fine to just test that the output looks sane (e.g. tensors have the correct sizes, things that should sum to 1 do, etc…). Even basic assertions will still catch a lot of bugs.

- Use approximate assertions to check that outputs are “close enough”. Pytest has approx, which lets you write assertions like assert pytest.approx(x) == 3.1, and torch has torch.allclose, so you can write assert torch.allclose(tensor1, tensor2) to check if tensors are “close enough”.

- Don’t be afraid to hardcode output snapshots into tests to aid with algorithm refactoring. It can be helpful to set a random seed and pin down exact outputs, so that when you refactor a function for performance you can make sure you have identical behavior before and after.

- Feel free to create tiny toy datasets inside of tests, e.g. a list with 5 training examples, and run training on it just to make sure everything works.

- Use tests as a way to interactively debug your code as you develop. You can stick a debugger statement, import pdb; pdb.set_trace(), into your code, then run a test that runs through that code path so you can interactively experiment as you work.
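To make a few of these tips concrete, here is a sketch of what such tests might look like in Pytest. The softmax function is a stand-in written for this example, not code from any particular paper; the tests show a seeded random input, sanity checks on the output, and pytest.approx for "close enough" comparisons.

```python
import math
import random
from typing import List

import pytest


def softmax(scores: List[float]) -> List[float]:
    """Convert raw scores into probabilities that sum to 1."""
    max_score = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - max_score) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def test_softmax_output_looks_sane():
    # Random input with a fixed seed, so any failure can be reproduced.
    rng = random.Random(0)
    scores = [rng.uniform(-5, 5) for _ in range(10)]
    probs = softmax(scores)
    assert len(probs) == len(scores)
    assert all(0.0 <= p <= 1.0 for p in probs)
    # Things that should sum to 1 do, up to floating point error.
    assert sum(probs) == pytest.approx(1.0)


def test_softmax_known_values():
    # Two equal scores should split the probability evenly.
    assert softmax([2.0, 2.0]) == pytest.approx([0.5, 0.5])
```

Saved in a file such as tests/test_softmax.py (the filename is just an example), these would be picked up by running pytest from the project root.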

Pet peeves with research code

The following are a few things that drive me crazy when reading research code. These are just my personal preferences, so YMMV.

- Dead code should be deleted: Frequently the code that accompanies papers is strewn with code paths that are never used, which makes it extremely hard to understand what’s going on. If you have code you’re not using, delete it, especially in the code you release along with your paper. Git maintains a version history so you can go back and find it later if you want.

- Avoid multiple return types from functions: I frequently see functions that sometimes return a string, sometimes an int, sometimes a dictionary, depending on a bunch of input parameters. This makes it incredibly difficult to understand what a function is doing and what to expect from it. Just stick with 1 return type per function. Most linters will complain about this as well.

- Avoid reinventing the wheel: I’ll often see research code that implements from scratch math operations that exist already in numpy or PyTorch. Anytime you write code, there’s a chance your code has bugs, so if there’s a function in a well-established, battle-tested library like sklearn or numpy, you should always use that version over writing your own.

- Avoid 1-char variable names: I know this comes from math, where everyone uses a single obscure greek letter for everything, but this makes it difficult to understand what things are referring to in code. IMO it’s always more understandable when a variable has a name like classification_vector rather than just c.

Takeaways

If there’s a single thing I want to leave you with, it’s that code published along with research papers should be usable by others. If you can accomplish that, you’re most of the way there. I believe that everything discussed in this article is very achievable for researchers, and is a lot easier than doing research itself, or learning LaTeX, or publishing papers. Researchers are smart people, and none of this is difficult. Once you get used to packaging code into a library that can be installed with pip install, it becomes second-nature, and the benefits to your success as a researcher and to others who want to use your code are immense.

For further reading, I’d recommend this excellent article on Python best practices. It’s a couple years old at this point, but I think the ideas in the article are still very valid today.